TOC

前言

k8up 目前是 CNCF sanbox 项目,基于 restic 来做数据备份到 S3 以及将数据从 S3 恢复到 PVC 中.

可以一次性备份也可以定时备份,普通备份是通过一个 job 挂载 PVC 来备份数据,也可以通过特定的程序命令,例如mysqldump将数据库数据导出做备份.

前提

- kubernetes

- helm

对于kubernetes环境搭建,可以参考用kind搭建k8s集群环境

开始部署

部署k8up

首先需要先安装CRD:

kubectl apply -f https://github.com/k8up-io/k8up/releases/download/k8up-4.1.0/k8up-crd.yaml

使用 helm 部署 k8up

helm repo add k8up-io https://k8up-io.github.io/k8up

helm install k8up k8up-io/k8up

使用 helm 部署 minio,如果你已经有 s3 存储则这一步不是必须的.

helm repo add bitnami https://charts.bitnami.com/bitnami

helm install minio bitnami/minio --set auth.rootPassword=password

使用 k8up 需要创建两个 secret,第一个是 k8up 与 minio(s3) 交互的账号密码信息.

第二个 secret 则是 k8up 用于加密备份数据的.

如果你已经有 s3 存储则这里需要填写对应的账号密码,但我推荐你首先使用 minio 来跑通一个测试来熟悉流程.

kubectl create secret generic minio-credentials --from-literal username=admin --from-literal password=password

kubectl create secret generic backup-repo --from-literal password=password

开始起飞

准备测试数据

创建一个 PVC 用于写入数据.

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: using

annotations:

k8up.io/backup: 'true'

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 200Mi

创建一个 job 用于往 PVC 中写入数据

apiVersion: batch/v1

kind: Job

metadata:

generateName: writedata

spec:

ttlSecondsAfterFinished: 120

template:

spec:

containers:

- name: kubectl

image: busybox:1.35.0

imagePullPolicy: IfNotPresent

command:

- /bin/sh

- -c

- date >> /data/log

volumeMounts:

- name: data

mountPath: /data

restartPolicy: OnFailure

volumes:

- name: data

persistentVolumeClaim:

claimName: using

创建一个 job 来查看当前使用的 PVC 中的数据.

apiVersion: batch/v1

kind: Job

metadata:

generateName: checkusing

spec:

ttlSecondsAfterFinished: 60

template:

spec:

containers:

- name: kubectl

image: busybox:1.35.0

imagePullPolicy: IfNotPresent

command:

- /bin/sh

- -c

- cat /data/log

volumeMounts:

- name: data

mountPath: /data

restartPolicy: OnFailure

volumes:

- name: data

persistentVolumeClaim:

claimName: using

备份PVC数据到S3

k8up会选择带有 k8up.io/backup: true 这个 annotation 的 namespace 或 PVC 来进行备份.因此这里先为default namespace 添加k8up.io/backup: true的 annotation.

kubectl patch namespace default -p '{"metadata":{"annotations":{"k8up.io/backup":"true"}}}'

创建一个 Backup 资源来做一次备份:

apiVersion: k8up.io/v1

kind: Backup

metadata:

name: backup-test

spec:

failedJobsHistoryLimit: 2

successfulJobsHistoryLimit: 2

backend:

repoPasswordSecretRef:

name: backup-repo

key: password

s3:

endpoint: http://minio:9000

bucket: k8up

accessKeyIDSecretRef:

name: minio-credentials

key: username

secretAccessKeySecretRef:

name: minio-credentials

key: password

正常的话会看到有一个用于备份的 pod,这个 pod 是从 job 资源启动起来的.

lan@lan~$ kubectl get job

NAME COMPLETIONS DURATION AGE

backup-backup-test-0 1/1 10s 40s

checkusingmwddb 0/1 7m51s 7m51s

writedatalflgc 1/1 3s 45s

lan@lan~$ kubectl get po

NAME READY STATUS RESTARTS AGE

backup-backup-test-0-5lxhh 0/1 Completed 0 19s

k8up-86949c9c54-2rwn6 1/1 Running 0 13m

minio-cf5685cbb-zv9wc 1/1 Running 0 13m

writedatalflgc-tg5jj 0/1 Completed 0 24s

checkusingmwddb-gjgnf 0/1 Completed 0 36s

查看 backup pod 的日志:

lan@lan~$ kubectl logs backup-backup-test-0-5lxhh

2023-03-29T03:59:46Z INFO k8up Starting k8up… {"version": "2.6.0", "date": "2023-02-28T07:42:12Z", "commit": "6d8ab1d0c2bcfd3348e73b5298847c9641ca199e", "go_os": "linux", "go_arch": "amd64", "go_version": "go1.19.6", "uid": 65532, "gid": 0}

2023-03-29T03:59:46Z INFO k8up.restic initializing

2023-03-29T03:59:46Z INFO k8up.restic setting up a signal handler

2023-03-29T03:59:46Z INFO k8up.restic.restic using the following restic options {"options": [""]}

2023-03-29T03:59:46Z INFO k8up.restic.restic.RepoInit.command restic command {"path": "/usr/local/bin/restic", "args": ["init", "--option", ""]}

2023-03-29T03:59:46Z INFO k8up.restic.restic.RepoInit.command Defining RESTIC_PROGRESS_FPS {"frequency": 0.016666666666666666}

2023-03-29T03:59:48Z INFO k8up.restic.restic.RepoInit.restic.stdout created restic repository ec3ed0463f at s3:http://minio:9000/k8up

2023-03-29T03:59:48Z INFO k8up.restic.restic.RepoInit.restic.stdout

2023-03-29T03:59:48Z INFO k8up.restic.restic.RepoInit.rest

...

2023-03-29T03:59:51Z ERROR k8up.restic.restic prometheus send failed {"error": "Post \"http://127.0.0.1/metrics/job/restic_backup/instance/default\": dial tcp 127.0.0.1:80: connect: connection refused"}

github.com/k8up-io/k8up/v2/restic/cli.(*Restic).sendBackupStats

/home/runner/work/k8up/k8up/restic/cli/backup.go:95

github.com/k8up-io/k8up/v2/restic/logging.(*BackupOutputParser).out

/home/runner/work/k8up/k8up/restic/logging/logging.go:162

github.com/k8up-io/k8up/v2/restic/logging.writer.Write

/home/runner/work/k8up/k8up/restic/logging/logging.go:103

io.copyBuffer

/opt/hostedtoolcache/go/1.19.6/x64/src/io/io.go:429

io.Copy

/opt/hostedtoolcache/go/1.19.6/x64/src/io/io.go:386

os/exec.(*Cmd).writerDescriptor.func1

/opt/hostedtoolcache/go/1.19.6/x64/src/os/exec/exec.go:407

os/exec.(*Cmd).Start.func1

/opt/hostedtoolcache/go/1.19.6/x64/src/os/exec/exec.go:544

2023-03-29T03:59:51Z INFO k8up.restic.restic.backup backup finished, sending snapshot list

2023-03-29T03:59:51Z INFO k8up.restic.restic.snapshots getting list of snapshots

2023-03-29T03:59:51Z INFO k8up.restic.restic.snapshots.command restic command {"path": "/usr/local/bin/restic", "args": ["snapshots", "--option", "", "--json"]}

2023-03-29T03:59:51Z INFO k8up.restic.restic.snapshots.command Defining RESTIC_PROGRESS_FPS {"frequency": 0.016666666666666666}

可以看到最后的几行显示backup finished, sending snapshot list类似的字样说明备份成功了,前面有一段 Prometheus 相关的报错是由于 Backup 这个资源会将结果往 Prometheus 推送,但是本文的 backup 资源没有配置 Prometheus 地址,因此使用了本地默认的 Prometheus 地址导致发送失败.

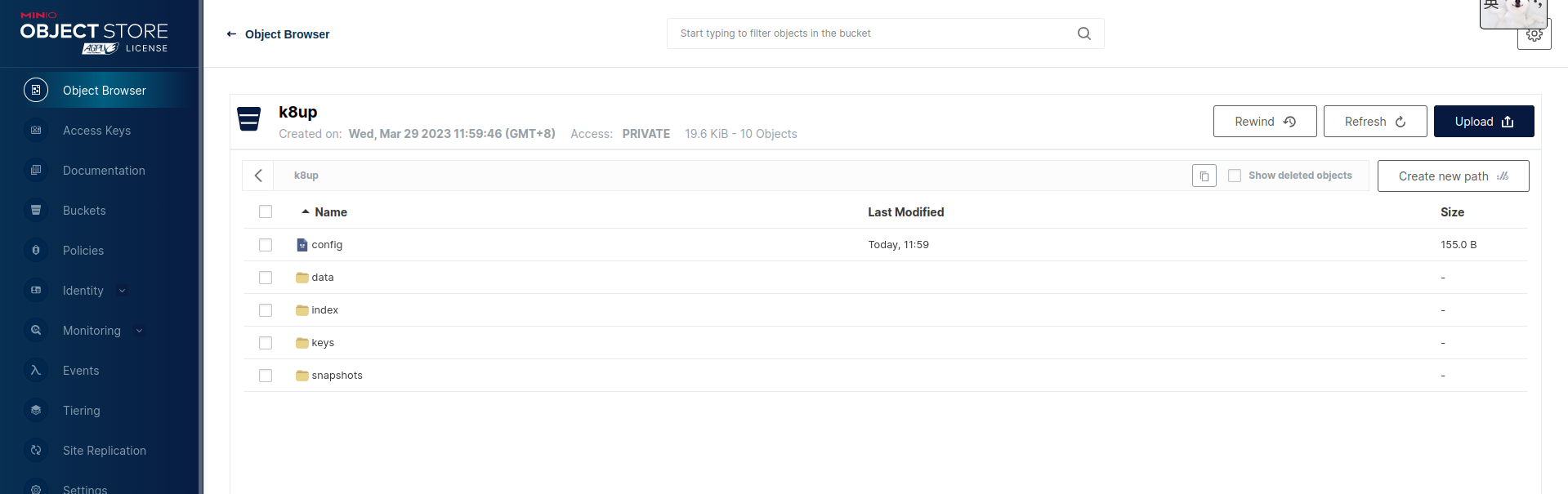

现在可以通过 minio 看一下是否在 s3 中已经有备份内容了.

可以看到 minio 中已经存有内容了,说明使用 k8up 备份 PVC 成功了.

还可以通过 kubectl 命令来查看 Backup 资源:

lan@lan:~$ kubectl get backup

NAME SCHEDULE REF COMPLETION PREBACKUP AGE

backup-test Succeeded NoPreBackupPodsFound 72m

接下来我们将这个备份在 s3 存储中的 PVC 数据恢复到另一个 PVC 中:

首先创建一个待用的 PVC:

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: restore

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 200Mi

恢复数据到PVC

创建一个 k8up 的 Restore 资源,用于将数据恢复到 PVC:

apiVersion: k8up.io/v1

kind: Restore

metadata:

name: restore2pvc

namespace: default

spec:

backend:

repoPasswordSecretRef:

name: backup-repo

key: password

s3:

endpoint: http://minio:9000

bucket: k8up

accessKeyIDSecretRef:

name: minio-credentials

key: username

secretAccessKeySecretRef:

name: minio-credentials

key: password

restoreMethod:

folder:

claimName: restore

lan@lan~$ kubectl get po

NAME READY STATUS RESTARTS AGE

backup-backup-test-0-5lxhh 0/1 Completed 0 59m

checkrestorekdgbf-7kp6l 0/1 Completed 0 65m

k8up-86949c9c54-2rwn6 1/1 Running 0 72m

minio-cf5685cbb-zv9wc 1/1 Running 0 72m

restore-restore2pvc-m6qh2 0/1 Completed 0 54s

同样的,Restore 也会创建一个 job 来做恢复数据到 PVC 的工作,这里可以看到 Restore 的 pod 已经处于 Completed 状态,说明恢复操作完成了.

现在,我们来检查一下这个 PVC 是否已经有从 s3 存储中恢复的数据:

创建一个 job 来查看通过备份恢复的 PVC 中的数据:

apiVersion: batch/v1

kind: Job

metadata:

generateName: checkrestore

spec:

ttlSecondsAfterFinished: 60

template:

spec:

containers:

- name: kubectl

image: busybox:1.35.0

imagePullPolicy: IfNotPresent

command:

- /bin/sh

- -c

- cat /data/log

volumeMounts:

- name: data

mountPath: /data

restartPolicy: OnFailure

volumes:

- name: data

persistentVolumeClaim:

claimName: restore

lan@lan~$ kubectl get po

NAME READY STATUS RESTARTS AGE

backup-backup-test-0-5lxhh 0/1 Completed 0 61m

checkrestore7rxfq-84bw6 0/1 Completed 0 5s

k8up-86949c9c54-2rwn6 1/1 Running 0 75m

minio-cf5685cbb-zv9wc 1/1 Running 0 74m

restore-restore2pvc-m6qh2 0/1 Completed 0 2m58s

lan@lan~$ kubectl logs checkrestore7rxfq-84bw6

Wed Mar 29 03:53:35 UTC 2023

Wed Mar 29 03:59:40 UTC 2023

可以看到 Restore 这个 PVC 中已经有之前写入的数据了.

总结

可以看到上述的演示效果是 k8up 会创建一个挂载了 PVC 的 job,然后通过将 PVC 的内容备份到 S3 中,因此这可以适用于绝大部分场景,但是对于部分场景来说是不足够的,例如备份 MySQL 数据库,如果直接备份 PVC 内的文件的话会发现可能少了数据,这是由于 MySQL 还没将数据落盘时直接备份文件是不可行的,但是可以通过使用 mysqldump 命令来导出数据,然后将结果做备份操作.

具体可以看看官网介绍:备份方式

对于数据恢复,依然也是通过一个 job 来挂载 PVC 并且将 S3 内的备份数据写入到 PVC 中.

上述的逻辑都是基于 restic 来做的.

由于 k8up 目前是 CNCF 项目,而我也很乐意研究 CNCF 基金会下的相关技术,可以加我微信表示你对 k8up 后续文章的期待,从而推动我更新后续内容:)

相关链接:

微信公众号

扫描下面的二维码关注我们的微信公众号,第一时间查看最新内容。同时也可以关注我的Github,看看我都在了解什么技术,在页面底部可以找到我的Github。